Lately, we’ve been hearing a lot about how AI is going to take over the world. Scientists are building various models that could be used in many areas of life, and their efforts aren’t fruitless: artificial intelligence is already in use in quite a lot of places. In the workplace, for instance, AI models are “hired” to do certain jobs, like answering employees’ questions. Unfortunately, they don’t always work the way they were designed to, as today’s story shows.

More info: Reddit

Some say that one day, artificial intelligence might take over the world. But some of the current AI models prove that day is not today

Image credits: Hatice Baran (not the actual photo)

A company bought an expensive AI model to help employees with any questions they might have

Image credits: Ankit Rainloure (not the actual photo)

Image credits: Matheus Bertelli (not the actual photo)

Image credits: Alterokahn

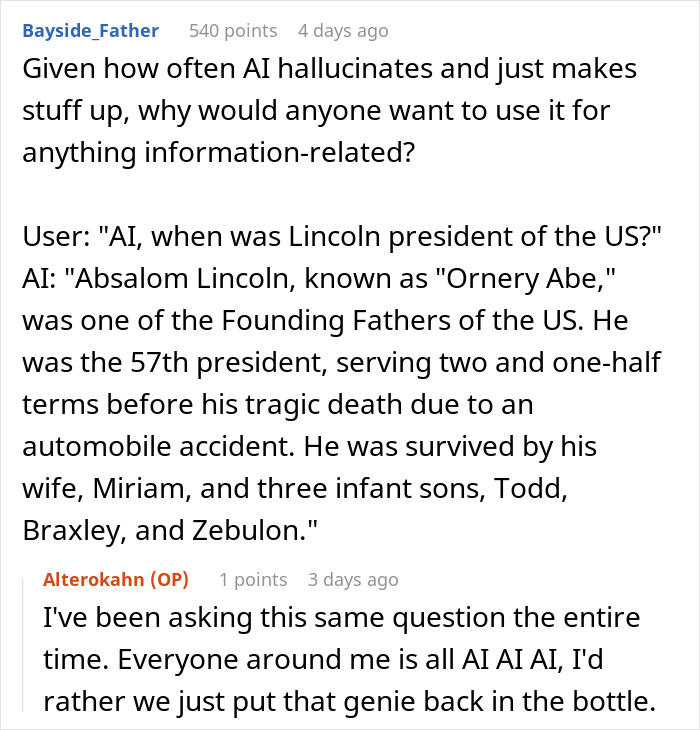

But the AI model malfunctioned so badly that, instead of asking it questions, employees started asking questions about it, as the model began giving out made-up information

To justify layoffs, the OP’s company purchased an expensive, unnamed ChatGPT-based AI chat model to answer employees’ internal questions.

The information given to the AI was very specific, so the model started telling people it wasn’t sure about its answers. Shortly after launch, it began simply telling employees to “Google it.” And that’s not even the end: according to the post’s author, the model now simply makes things up and ignores the original questions.

The OP talked with the engineers in charge of the model, who explained that it bases its answers on a list of the 5-10 top-rated documents. If none of these documents contains a straightforward answer, the model starts “hallucinating” and stitching together mismatched pieces of information.
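The engineers’ description resembles what’s commonly called retrieval-augmented generation: the system first picks the best-matching documents, then answers from them. Below is a rough Python sketch of just the retrieval step, assuming a naive word-overlap score and a hypothetical cutoff (`min_score`); all function names here are made up for illustration, and the OP’s actual system is unknown and almost certainly ranks documents in a more sophisticated way. What it shows is the fallback: when even the top-ranked document is a poor match, a well-behaved system admits it doesn’t know instead of stitching unrelated pieces together.

```python
import re


def tokenize(text: str) -> list[str]:
    # Lowercase the text and split it into simple alphanumeric words.
    return re.findall(r"[a-z0-9]+", text.lower())


def score(question: str, document: str) -> float:
    # Hypothetical relevance score: the fraction of the question's
    # distinct words that also appear in the document.
    q_words = set(tokenize(question))
    if not q_words:
        return 0.0
    d_words = set(tokenize(document))
    return len(q_words & d_words) / len(q_words)


def retrieve_top_k(question: str, documents: list[str], k: int = 5) -> list[str]:
    # Rank all documents by relevance and keep the k best ("top-rated") ones.
    ranked = sorted(documents, key=lambda doc: score(question, doc), reverse=True)
    return ranked[:k]


def answer(question: str, documents: list[str], k: int = 5, min_score: float = 0.5) -> str:
    # min_score is an assumed threshold: below it, even the best document
    # is treated as not answering the question.
    top = retrieve_top_k(question, documents, k)
    if not top or score(question, top[0]) < min_score:
        return "I'm not sure. No document answers this question."
    return f"Based on: {top[0]}"
```

For example, with the documents `"Vacation requests are submitted through the HR portal."` and `"The cafeteria opens at 8 am."`, asking “Where are vacation requests submitted?” returns an answer based on the first document, while “What is the parking policy?” falls below the cutoff and gets the “I’m not sure” reply. A system without that cutoff would answer from whatever scraps ranked highest, which is roughly the failure the OP describes.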

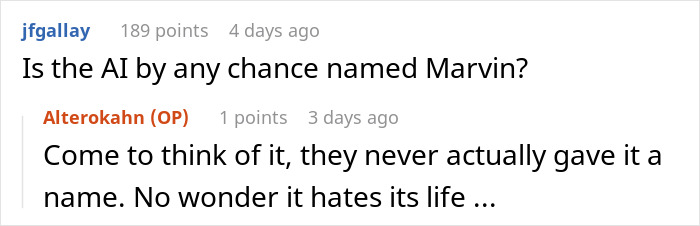

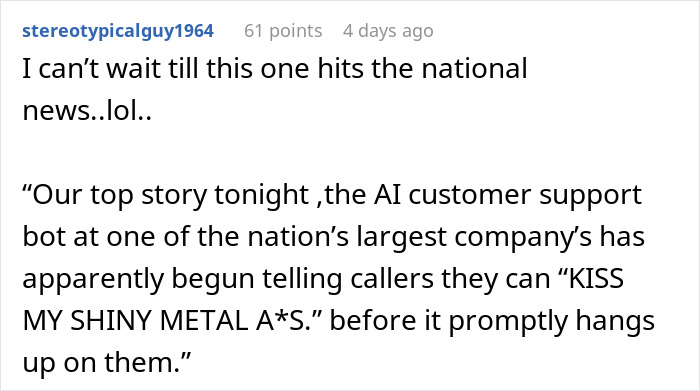

Some netizens found the situation funny and joked that “This AI entered its teenage years,” while others admitted they felt a bit jealous of it, since it can simply tell people to Google whatever they need. A few were just curious about what made the model malfunction this way.

Image credits: Lukas (not the actual photo)

But AI models can be used for far more than answering questions. In fact, AI is so ingrained in our lives that we often don’t even notice it. Nearly all social media platforms, for example, use AI in some capacity, such as when they recommend accounts you might like or arrange your feed around your interests.

The same goes for Netflix, which uses AI to improve its content recommendations. In online stores, AI-based algorithms are used to predict, for example, what customers might want to buy. The AI field is already so broad, and growing so quickly, that it’s hard to keep track of everything in our lives that AI is in charge of.

Still, experts warn that AI shouldn’t be trusted blindly; after all, it’s still a machine. It can be unpredictable or act immorally, and the algorithms behind it can be opaque.

In the future, artificial intelligence may evolve enough to overcome these flaws, but other challenges will likely arise, such as AI models taking jobs away from people. In fact, they already do this, or at least attempt to: in the Reddit story, the AI was bought to justify layoffs, and such cases may become more common in the future.

Some internet folks thought this whole situation was hilarious, while others were interested in why such a smart technology started acting so stupidly
